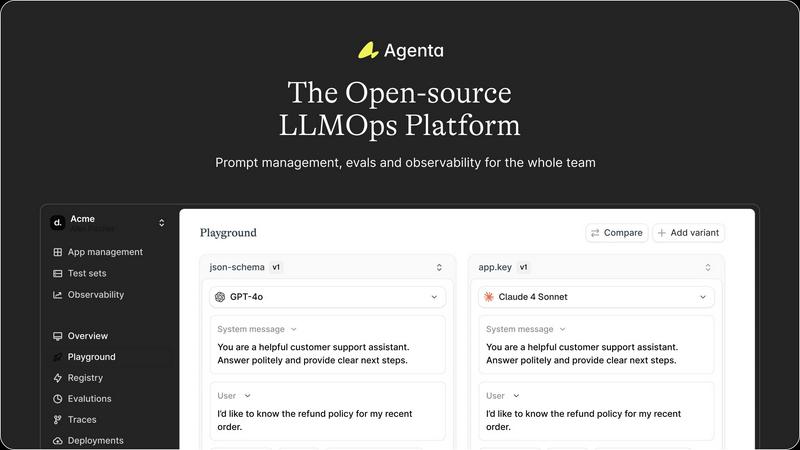

Agenta

Agenta is the open-source LLMOps platform that helps your team build and manage reliable AI apps together.

About Agenta

Agenta is the dynamic, open-source LLMOps platform designed to transform how AI teams build and ship reliable, production-ready LLM applications! It tackles the core chaos of modern LLM development head-on, where prompts are scattered, teams work in silos, and debugging feels like a guessing game. Agenta provides a unified, collaborative hub where developers, product managers, and subject matter experts can finally work together seamlessly. It centralizes the entire LLM workflow, enabling teams to experiment with prompts and models, run rigorous automated and human evaluations, and gain deep observability into production systems. The core value proposition is powerful: move from unpredictable, ad-hoc processes to a structured, evidence-based development cycle. By integrating prompt management, evaluation, and observability into one platform, Agenta empowers teams to iterate faster, validate every change, and confidently deploy LLM applications that perform consistently and reliably. It's the single source of truth your whole team needs to turn the unpredictability of LLMs into a competitive advantage!

Features of Agenta

Unified Experimentation Playground

Ditch the scattered scripts and documents! Agenta's unified playground lets you compare different prompts, parameters, and models from various providers side-by-side in real-time. See immediate results, track a complete version history of every iteration, and debug issues using real production data. It's your central command center for rapid, informed experimentation, freeing you from vendor lock-in and chaotic workflows!

Automated & Flexible Evaluation Framework

Replace gut feelings with hard evidence! Agenta's evaluation system allows you to create a rigorous, automated testing process for your LLM applications. Integrate LLM-as-a-judge, use built-in metrics, or plug in your own custom code as evaluators. Crucially, you can evaluate the full trace of an agent's reasoning, not just the final output, and seamlessly incorporate human feedback from domain experts into your evaluation workflow!

Production Observability & Debugging

Gain crystal-clear visibility into your live applications! Agenta traces every single LLM request, allowing you to pinpoint exact failure points in complex chains and agentic workflows. You can annotate traces with your team, gather user feedback, and, with a single click, turn any problematic trace into a test case for your playground. Monitor performance and catch regressions automatically with live, online evaluations!

Collaborative Workflow for Whole Teams

Break down the silos between engineers, PMs, and domain experts! Agenta provides a safe UI for non-coders to edit prompts, run experiments, and compare results. Empower product managers and subject matter experts to conduct evaluations directly, bringing everyone into a unified development loop. With full parity between its API and UI, Agenta integrates both programmatic and interactive workflows into one collaborative hub!

Use Cases of Agenta

Rapid Prototyping and Prompt Engineering

Accelerate your initial development phase! Teams can use Agenta's playground to rapidly prototype different LLM approaches, test countless prompt variations, and compare model outputs from OpenAI, Anthropic, and others simultaneously. This structured experimentation cuts down development time and helps you find the most effective configuration before writing extensive code!

Building Evaluation Pipelines for QA

Establish a robust quality assurance process for your AI features! Use Agenta to build automated evaluation pipelines that run every time you update a prompt or model. Combine LLM judges with custom code checks to measure accuracy, tone, safety, and more. This ensures every deployment is validated against a comprehensive test suite, preventing performance regressions!
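As a rough illustration of the idea, the sketch below runs a small test suite through custom code checks and gates a release on minimum scores. It is plain Python and does not use Agenta's SDK; `TestCase`, `run_suite`, `passes_gate`, the two evaluators, and `stub_app` are hypothetical names invented for this example, and `stub_app` stands in for a real LLM call.

```python
from dataclasses import dataclass


@dataclass
class TestCase:
    prompt: str
    expected_keyword: str  # hypothetical field: a word the answer should mention


def contains_keyword(case, output):
    """Custom code check: 1.0 if the expected keyword appears in the output."""
    return 1.0 if case.expected_keyword.lower() in output.lower() else 0.0


def within_length(case, output, max_words=50):
    """Custom code check: 1.0 if the output stays within a word budget."""
    return 1.0 if len(output.split()) <= max_words else 0.0


def run_suite(app, cases, evaluators):
    """Run every case through the app and return the mean score per evaluator."""
    totals = {name: 0.0 for name in evaluators}
    for case in cases:
        output = app(case.prompt)
        for name, evaluate in evaluators.items():
            totals[name] += evaluate(case, output)
    return {name: total / len(cases) for name, total in totals.items()}


def passes_gate(scores, thresholds):
    """Return True only if every evaluator meets its minimum score."""
    return all(scores[name] >= minimum for name, minimum in thresholds.items())


def stub_app(prompt):
    # Stand-in for a real LLM application (assumption for this sketch).
    return "Refunds are processed within 5 business days."


cases = [
    TestCase("What is the refund policy?", "refund"),
    TestCase("How do I reset my password?", "password"),
]
evaluators = {"keyword": contains_keyword, "length": within_length}

scores = run_suite(stub_app, cases, evaluators)
release_ok = passes_gate(scores, {"keyword": 0.8, "length": 1.0})
print(scores, release_ok)
```

Here the stub answers only one of the two questions correctly, so the keyword score falls below its threshold and the gate blocks the change. An LLM-as-a-judge evaluator could be added to the same dictionary as another function with the same signature.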

Debugging Complex Production Issues

Solve mysteries in live applications quickly! When a user reports a strange or incorrect AI response, engineers can use Agenta's observability to trace the exact request, examine every intermediate step in an agent's reasoning, and identify the failure point. They can then instantly save that trace as a test case and iterate on a fix in the playground, closing the feedback loop rapidly!

Enabling Cross-Functional AI Development

Facilitate collaboration between technical and non-technical stakeholders! A financial services company can have their compliance experts use Agenta's UI to directly tweak prompts for regulatory wording, while product managers run A/B evaluations on different response formats. This ensures the final application benefits from diverse expertise without bottlenecking engineering!

Frequently Asked Questions

Is Agenta really open-source?

Yes, absolutely! Agenta is open-source under the MIT license. You can dive into the code on GitHub, self-host the platform, and even contribute to its development. This gives you full control and transparency, and avoids vendor lock-in for your core LLM operations infrastructure!

How does Agenta integrate with existing frameworks?

Agenta is designed for seamless integration! It works natively with popular frameworks like LangChain and LlamaIndex, and supports any LLM provider (OpenAI, Anthropic, Cohere, etc.) or open-source model. You can integrate Agenta's SDK into your existing codebase with minimal changes, allowing you to add structured experimentation and observability without a full rewrite!

Can non-developers really use Agenta effectively?

They sure can! A key design goal of Agenta is to democratize LLM development. The platform provides an intuitive web UI that allows product managers, domain experts, and other non-coders to safely edit prompts, run experiments in the playground, and review evaluation results. This bridges the gap between AI engineering and subject matter expertise!

What does "full trace" evaluation mean?

It means you can evaluate every step of a complex AI agent's process, not just its final answer! For an agent that does web search, reasoning, and then answers, Agenta can capture and evaluate each intermediate thought and action. This is crucial for debugging where things went wrong and for improving the reliability of sophisticated, multi-step LLM applications!
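To make the difference from output-only evaluation concrete, here is a minimal sketch of per-step checks over a recorded trace. It is not Agenta's API or data model: `Span`, `Trace`, `evaluate_trace`, `first_failure`, and the step names are hypothetical, chosen to mirror the search, reasoning, and answer example above.

```python
from dataclasses import dataclass, field


@dataclass
class Span:
    name: str    # hypothetical step label, e.g. "web_search"
    input: str
    output: str


@dataclass
class Trace:
    spans: list = field(default_factory=list)


def non_empty(span):
    """Step check: the step produced some output."""
    return bool(span.output.strip())


def evaluate_trace(trace, checks):
    """Apply the matching check to each step; steps without a check pass."""
    results = []
    for span in trace.spans:
        check = checks.get(span.name)
        passed = True if check is None else check(span)
        results.append((span.name, passed))
    return results


def first_failure(results):
    """Return the name of the first failing step, or None if all passed."""
    for name, passed in results:
        if not passed:
            return name
    return None


trace = Trace(spans=[
    Span("web_search", "agenta license", ""),  # search returned nothing
    Span("reasoning", "no search results", "Answer from memory instead."),
    Span("answer", "Answer from memory instead.", "It is open-source."),
])
checks = {"web_search": non_empty, "answer": non_empty}

results = evaluate_trace(trace, checks)
print(results, first_failure(results))
```

The final answer passes its own check, so an output-only evaluation would report success, while the per-step results point at the empty search as the place to start debugging.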
