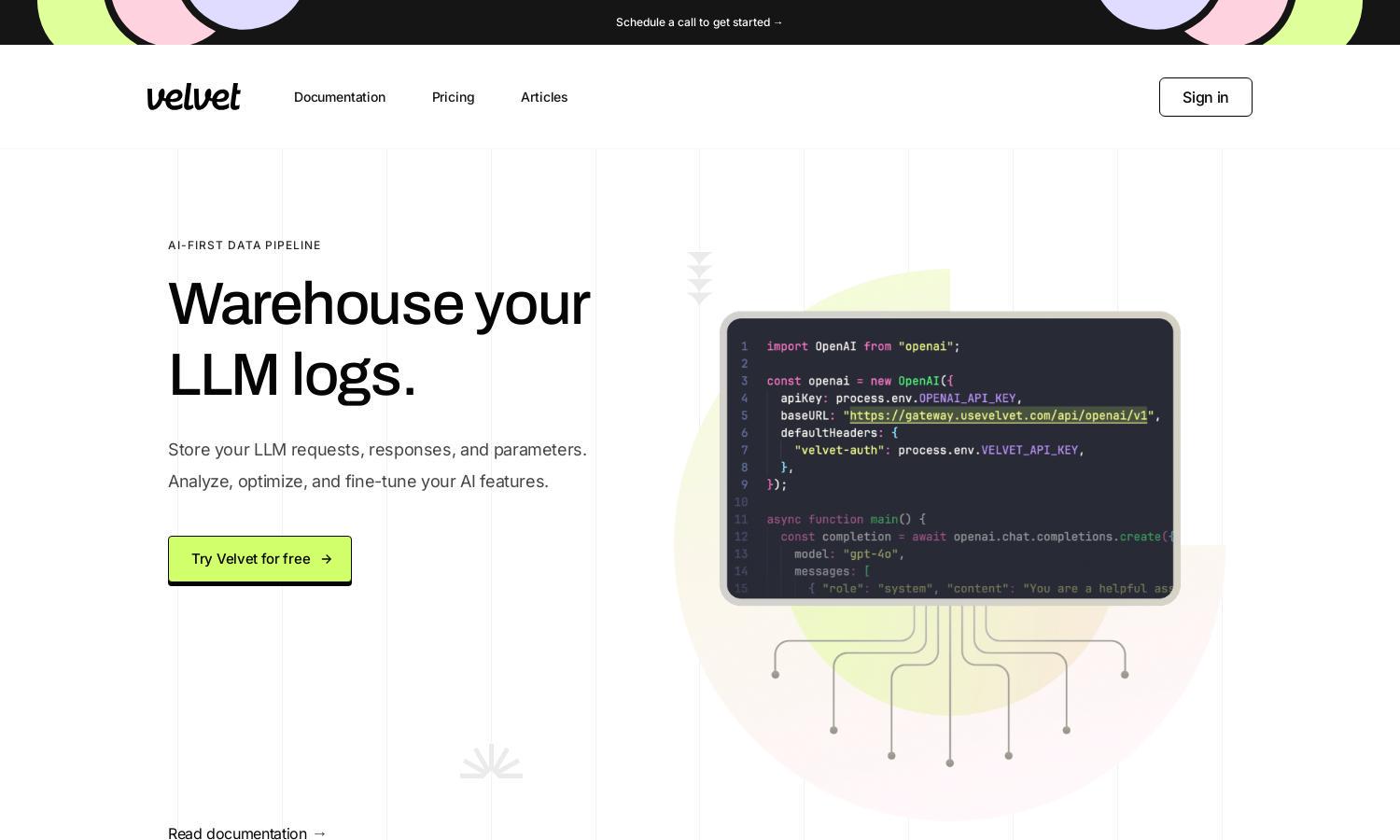

Velvet

About Velvet

Velvet is an AI gateway for engineers who integrate the OpenAI and Anthropic APIs into their workflows. With two lines of code, it logs requests, helps optimize performance, and supports running experiments, giving teams usage data to inform their decisions.

Velvet is free to start, with up to 10k requests per month at no charge. Users can then move to paid tiers that extend the logging, caching, and experiment features as their needs grow.

Velvet’s user interface is designed for simplicity and efficiency. The layout makes it easy for engineers to find and explore the key functions: request logging, performance analysis, and experiments.

How Velvet works

Users start by creating an account and following the setup instructions in the documentation. Once Velvet is integrated with their database, it logs every API request made to OpenAI and Anthropic endpoints. Data storage and caching can both be customized.

Key Features of Velvet

Request Logging

Velvet’s request logging feature warehouses every API call in a PostgreSQL database. With the logs in their own database, engineers can analyze usage patterns and performance, calculate costs, and build datasets for fine-tuning their AI models.
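As an illustration of the cost analysis that logged requests make possible, here is a minimal sketch. It is not Velvet's API or schema: the `LoggedRequest` fields, the model names, and the prices in `PRICES` are hypothetical placeholders, and in practice the rows would come from a query against the PostgreSQL log table.

```python
from dataclasses import dataclass

# Hypothetical prices in USD per million tokens; real prices vary by
# provider and model and are NOT taken from Velvet or any vendor.
PRICES = {
    "model-a": {"input": 1.00, "output": 2.00},
    "model-b": {"input": 0.50, "output": 1.50},
}


@dataclass
class LoggedRequest:
    """One logged API call (assumed shape, not Velvet's actual schema)."""
    model: str
    input_tokens: int
    output_tokens: int


def request_cost(row: LoggedRequest) -> float:
    """Cost of a single logged request in USD."""
    price = PRICES[row.model]
    total = row.input_tokens * price["input"] + row.output_tokens * price["output"]
    return total / 1_000_000


def cost_by_model(rows: list[LoggedRequest]) -> dict[str, float]:
    """Sum request costs per model."""
    totals: dict[str, float] = {}
    for row in rows:
        totals[row.model] = totals.get(row.model, 0.0) + request_cost(row)
    return totals
```

Grouping by model is only one option; the same loop could group by endpoint, user, or day if those columns are present in the logs.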

Optimized Caching

With Velvet's caching feature, users can significantly reduce API costs and latency. This intelligent system allows for the efficient management of requests, enabling engineers to streamline operations and enhance performance while maintaining complete transparency and control over API interactions.
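To show why caching lowers both cost and latency, the sketch below implements a simple exact-match response cache. It is a generic illustration, not Velvet's implementation: the `ResponseCache` class and the `send` callable are hypothetical, and a real gateway would also need expiry and size limits.

```python
import hashlib
import json
from typing import Any, Callable


class ResponseCache:
    """Exact-match cache keyed on a hash of the request payload."""

    def __init__(self, send: Callable[[dict], Any]):
        self._send = send      # hypothetical function that makes the real API call
        self._store: dict[str, Any] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def key_for(payload: dict) -> str:
        # Sort keys so logically identical payloads hash to the same key.
        canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def request(self, payload: dict) -> Any:
        key = self.key_for(payload)
        if key in self._store:
            self.hits += 1     # served locally: no API cost, no network latency
            return self._store[key]
        self.misses += 1
        response = self._send(payload)
        self._store[key] = response
        return response
```

Exact-match caching only helps when identical requests repeat, which is common in test suites and evaluation runs.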

Experiment Framework

Velvet's experiment framework helps engineers test different models and settings. Users can run one-off or continuous experiments on selected datasets to evaluate and optimize AI outputs.
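The idea of testing several models and settings against a dataset can be sketched as a small grid search. This is an assumption-laden illustration rather than Velvet's experiment API: `run_experiment`, the `generate` and `score` callables, and the dataset shape (`input` and `expected` keys) are all hypothetical.

```python
from itertools import product
from typing import Callable


def run_experiment(
    models: list[str],
    temperatures: list[float],
    dataset: list[dict],
    generate: Callable[[str, float, str], str],  # hypothetical model call
    score: Callable[[str, str], float],          # hypothetical metric
) -> list[dict]:
    """Score every (model, temperature) pair on the dataset, best first."""
    if not dataset:
        raise ValueError("dataset must contain at least one example")
    results = []
    for model, temperature in product(models, temperatures):
        scores = [
            score(generate(model, temperature, example["input"]), example["expected"])
            for example in dataset
        ]
        results.append({
            "model": model,
            "temperature": temperature,
            "mean_score": sum(scores) / len(scores),
        })
    # sorted() is stable, so ties keep their original grid order.
    return sorted(results, key=lambda r: r["mean_score"], reverse=True)
```

A one-off experiment would call this once; a continuous experiment could rerun it on a schedule as new logged requests are added to the dataset.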
