LangWatch

About LangWatch

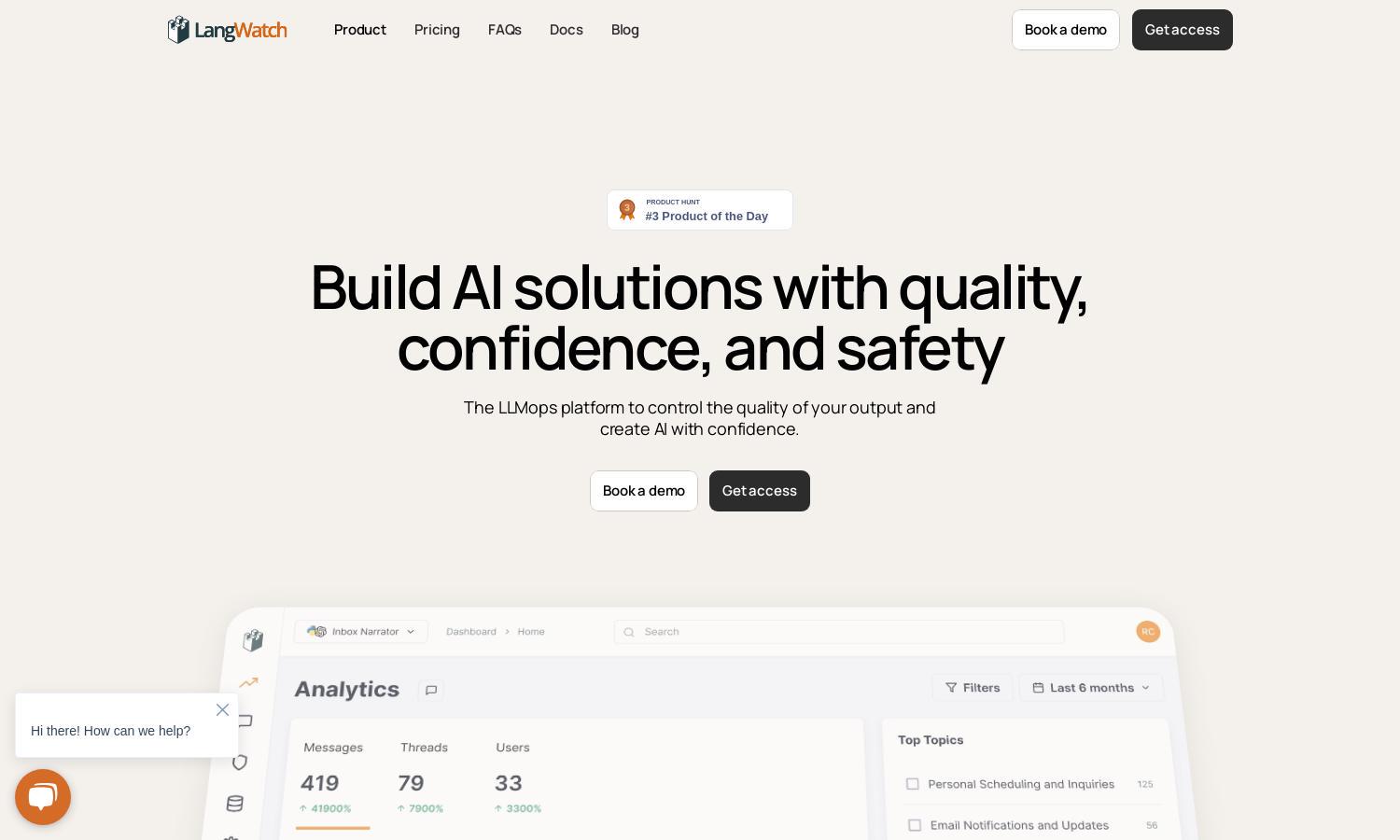

LangWatch is an LLMOps platform for businesses building with generative AI. It aims to improve output quality through real-time monitoring, a library of evaluations, and user-feedback collection. By helping teams address AI risks such as hallucinations, LangWatch supports companies in building reliable AI solutions that meet market demands.

LangWatch offers pricing plans ranging from a free tier to custom enterprise solutions. The free plan includes 1 project and 1,000 messages, while the Growth plan, at €99/month, supports 2 projects and 10,000 messages. Upgrading unlocks additional features, enhanced support, and custom analytics.

LangWatch's user interface is designed for simplicity and efficiency. Its intuitive navigation gives users direct access to functions such as monitoring and evaluation, making it easier to manage AI outputs.

How LangWatch works

Users start by signing up and integrating their LLM application with the platform. After onboarding, they can use features such as real-time monitoring, automated prompt optimization, and quality assessments. Performance metrics are available directly in the platform, helping users see where their AI solutions need improvement.

Key Features of LangWatch

Automated Prompt Optimization

LangWatch's Automated Prompt Optimization feature lets users improve the performance of their AI models without extensive data-science expertise. It automatically searches for the best-performing prompts, improving output quality and reducing the time spent on manual prompt tuning.
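The listing does not describe how LangWatch searches for prompts, so the following is only a minimal sketch of the general idea, assuming a fixed list of candidate prompts, a small labelled dataset, and an exact-match scorer. `pick_best_prompt`, `toy_model`, and `exact_match` are hypothetical names, not part of any LangWatch API; a real optimizer would call an actual LLM and would typically generate new candidate prompts rather than only ranking a fixed list.

```python
from statistics import mean
from typing import Callable

# Hypothetical signatures: model(prompt, question) -> output, scorer(output, expected) -> score.
Model = Callable[[str, str], str]
Scorer = Callable[[str, str], float]


def pick_best_prompt(
    candidates: list[str],
    dataset: list[tuple[str, str]],
    model: Model,
    scorer: Scorer,
) -> tuple[str, float]:
    """Return the candidate prompt with the highest mean score over the dataset."""
    best_prompt, best_score = candidates[0], float("-inf")
    for prompt in candidates:
        # Score every (question, expected) pair with this prompt and average the results.
        score = mean(scorer(model(prompt, q), expected) for q, expected in dataset)
        if score > best_score:  # strict '>' keeps the earlier prompt on ties
            best_prompt, best_score = prompt, score
    return best_prompt, best_score


def toy_model(prompt: str, question: str) -> str:
    # Stand-in for an LLM call: it only answers in uppercase when the prompt asks for it.
    answer = {"capital of France?": "paris", "2 + 2?": "4"}[question]
    return answer.upper() if "UPPERCASE" in prompt else answer


def exact_match(output: str, expected: str) -> float:
    # Simplest possible scorer: 1.0 for an exact match, otherwise 0.0.
    return 1.0 if output == expected else 0.0


dataset = [("capital of France?", "PARIS"), ("2 + 2?", "4")]
candidates = ["Answer briefly.", "Answer briefly in UPPERCASE."]
best, score = pick_best_prompt(candidates, dataset, toy_model, exact_match)
print(best, score)  # Answer briefly in UPPERCASE. 1.0
```

Exhaustive scoring like this is easy to reason about but costs one model call per prompt per example, which is why practical optimizers usually limit the candidate set or the evaluation sample.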

Real-time Monitoring

Real-time Monitoring in LangWatch lets users track AI performance continuously. It provides immediate insight into outputs and user interactions, so teams can take corrective action quickly and keep their generative AI applications reliable.
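As an illustration of the kind of check a monitoring system can run on a live stream of results, here is a minimal sketch assuming each model response already receives a numeric quality score between 0 and 1. `RollingQualityMonitor`, the window size, and the threshold are hypothetical choices for this example and do not reflect LangWatch's implementation.

```python
from collections import deque


class RollingQualityMonitor:
    """Track the mean of the most recent quality scores and flag drops below a threshold."""

    def __init__(self, window: int, threshold: float) -> None:
        self.scores = deque(maxlen=window)  # oldest scores fall off automatically
        self.threshold = threshold

    def record(self, score: float) -> bool:
        """Add one score and return True if the rolling mean is now below the threshold."""
        self.scores.append(score)
        rolling_mean = sum(self.scores) / len(self.scores)
        return rolling_mean < self.threshold


monitor = RollingQualityMonitor(window=3, threshold=0.7)
alerts = [monitor.record(s) for s in [1.0, 0.9, 0.4, 0.3]]
print(alerts)  # [False, False, False, True]
```

A rolling window smooths out single bad responses while still reacting within a few requests when quality genuinely degrades; a shorter window reacts faster but raises more false alarms.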

Quality Control Evaluations

LangWatch’s Quality Control Evaluations feature provides assessments of AI outputs. Users can run automatic evaluations across a range of scenarios to check that results meet their quality standards, building trust in their generative AI solutions.
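A minimal sketch of a scenario-based evaluation run, assuming each scenario is judged by a simple keyword rule. The `Scenario` type, `evaluate` function, and `toy_generate` stub are hypothetical; LangWatch's own evaluators are not described here and are likely richer than keyword matching.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Scenario:
    name: str
    prompt: str
    must_contain: list[str]  # hypothetical pass rule: every keyword must appear


def evaluate(scenarios: list[Scenario], generate: Callable[[str], str]) -> dict[str, bool]:
    """Run each scenario through `generate` and record whether its output passes."""
    results = {}
    for s in scenarios:
        output = generate(s.prompt).lower()
        results[s.name] = all(k.lower() in output for k in s.must_contain)
    return results


def toy_generate(prompt: str) -> str:
    # Stand-in for the AI application under test.
    return {
        "refund policy": "Refunds are issued within 30 days.",
        "opening hours": "We are open on weekdays.",
    }[prompt]


scenarios = [
    Scenario("refund", "refund policy", ["30 days"]),
    Scenario("hours", "opening hours", ["weekdays", "9am"]),
]
results = evaluate(scenarios, toy_generate)
pass_rate = sum(results.values()) / len(results)
print(results, pass_rate)  # {'refund': True, 'hours': False} 0.5
```

Keeping per-scenario results, rather than only the overall pass rate, shows which cases regressed when a prompt or model changes.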
