ggml.ai

About ggml.ai

ggml.ai is the home of ggml, a tensor library for machine learning written in C and designed to run large models with high performance on commodity hardware. Features such as automatic differentiation and zero memory allocations during runtime keep the library simple and efficient, which makes it a practical foundation for developers building AI applications.
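The "zero memory allocations during runtime" idea can be illustrated with an arena (bump) allocator. A single buffer is reserved during setup, and tensors are then carved out of it by advancing an offset, so evaluating a model never calls the system allocator. This is a generic C sketch of that technique, not ggml's code. The `arena` type and the `arena_*` functions are hypothetical names chosen for illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical arena allocator for illustration; not part of ggml's API.
   One buffer is reserved up front; allocations only advance an offset. */
typedef struct {
    uint8_t *base; /* start of the reserved buffer */
    size_t size;   /* capacity in bytes */
    size_t used;   /* bytes handed out so far */
} arena;

/* Reserve the whole working buffer once, at setup time. Returns 1 on success. */
int arena_init(arena *a, size_t size) {
    a->base = malloc(size);
    a->size = a->base ? size : 0;
    a->used = 0;
    return a->base != NULL;
}

/* Hand out n bytes at an offset that is a multiple of align (a power of two).
   malloc's result is suitably aligned for standard types, so small alignments
   also hold for the returned address. Returns NULL when the arena is full. */
void *arena_alloc(arena *a, size_t n, size_t align) {
    assert(align != 0 && (align & (align - 1)) == 0);
    size_t start = (a->used + align - 1) & ~(align - 1);
    if (start > a->size || n > a->size - start) return NULL;
    a->used = start + n;
    return a->base + start;
}

/* Make the whole buffer available again for the next evaluation. */
void arena_reset(arena *a) { a->used = 0; }

/* Release the buffer at shutdown. */
void arena_free(arena *a) {
    free(a->base);
    a->base = NULL;
    a->size = a->used = 0;
}
```

A caller would size the arena once for the largest evaluation it expects and call `arena_reset` between runs, so steady-state inference performs no heap allocation at all.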

ggml is open source under the MIT license, and the project encourages contribution and collaboration. There are no paid subscription tiers, so anyone can use, study, and modify the library freely. Users who want to support development further can financially sponsor existing contributors.

ggml is a library rather than a hosted application, so it has no graphical user interface. Developers work with it through its C API, its documentation, and the example programs in its source repository.

How ggml.ai works

Developers start by obtaining the open-source library and reading its documentation and examples. They then implement their models on top of ggml's tensor operations and optimized kernels. Because the library is small and self-contained, developers can concentrate on their models instead of on complex setup.

Key Features of ggml.ai

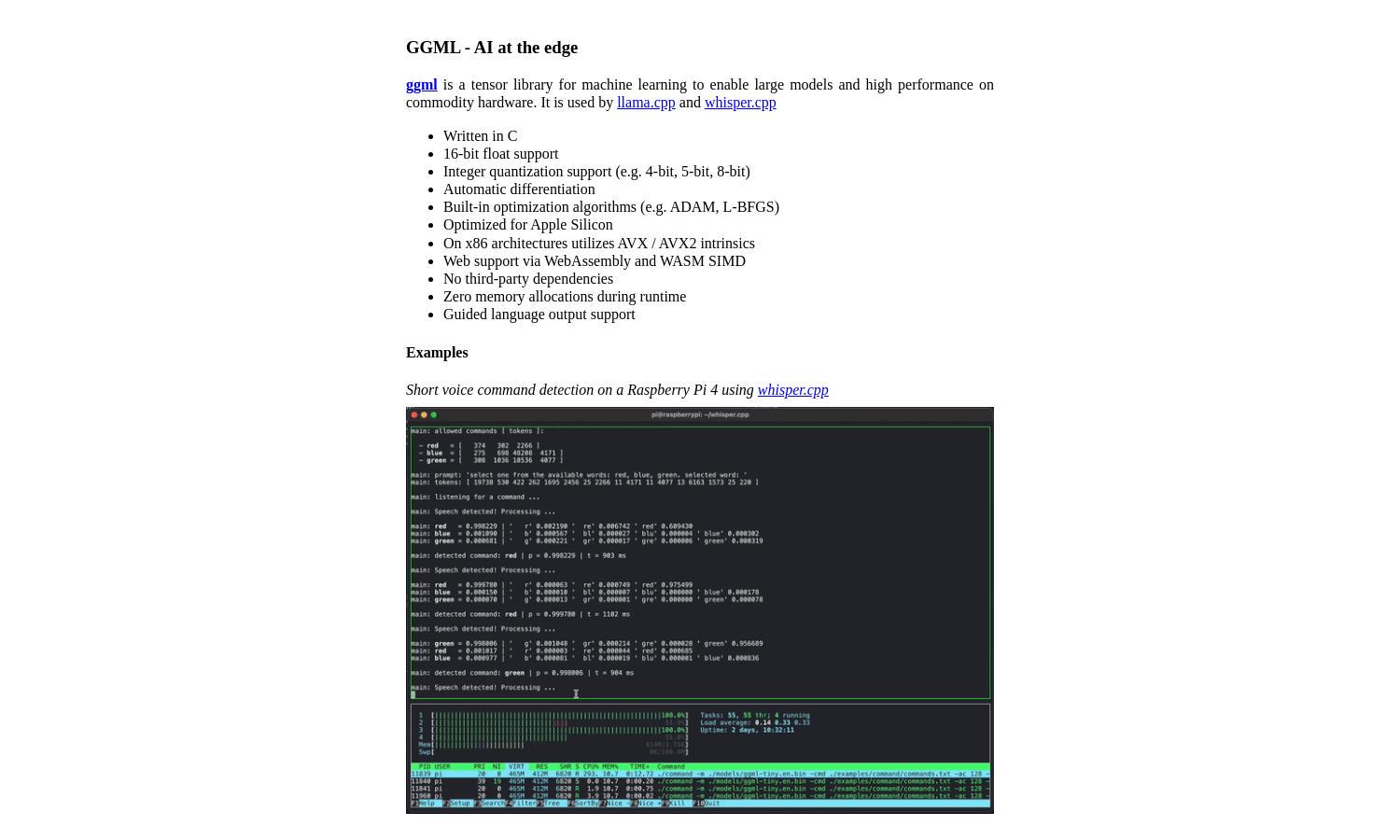

High-performance inference

High-performance inference is ggml's core function. The library powers whisper.cpp and llama.cpp, which run the Whisper and LLaMA models efficiently on commodity hardware such as ordinary laptops and desktops. Efficient local execution keeps latency low, which matters for real-time uses such as live speech transcription.
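ggml separates describing a computation (building a graph of tensor operations) from executing it. The following is a minimal, hypothetical C sketch of that pattern for a single dense layer, y = relu(W x + b). Nodes are recorded in creation order and then evaluated in one pass. The `node` type, the `op_t` values, and `graph_compute` are illustrative names, not ggml's API, and the kernels are plain loops without the SIMD or GPU optimizations a real library would use.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical op set for illustration; not ggml's API. */
typedef enum { OP_INPUT, OP_MATVEC, OP_ADD, OP_RELU } op_t;

typedef struct node {
    op_t op;
    const struct node *a, *b; /* operand nodes (NULL when unused) */
    const float *w;           /* row-major [n x a->n] weights for OP_MATVEC */
    int n;                    /* number of output elements */
    float *out;               /* caller-provided output buffer */
} node;

/* Compute one node; its operands must already have been computed. */
static void eval_node(node *t) {
    switch (t->op) {
    case OP_INPUT: /* data was filled in by the caller */
        break;
    case OP_MATVEC: /* out[i] = sum over j of w[i][j] * a[j] */
        for (int i = 0; i < t->n; i++) {
            float acc = 0.0f;
            for (int j = 0; j < t->a->n; j++)
                acc += t->w[i * t->a->n + j] * t->a->out[j];
            t->out[i] = acc;
        }
        break;
    case OP_ADD: /* element-wise sum of two equal-length operands */
        assert(t->a->n == t->n && t->b->n == t->n);
        for (int i = 0; i < t->n; i++) t->out[i] = t->a->out[i] + t->b->out[i];
        break;
    case OP_RELU: /* element-wise max(x, 0) */
        assert(t->a->n == t->n);
        for (int i = 0; i < t->n; i++)
            t->out[i] = t->a->out[i] > 0.0f ? t->a->out[i] : 0.0f;
        break;
    }
}

/* Nodes are stored in creation order, which is a valid topological order
   because each node refers only to nodes created before it. */
void graph_compute(node *nodes, size_t count) {
    for (size_t k = 0; k < count; k++) eval_node(&nodes[k]);
}
```

Because the graph is built once, the same nodes can be re-evaluated for each new input simply by refilling the input buffer and calling `graph_compute` again.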

Automatic differentiation

ggml supports automatic differentiation: it derives gradient computations from a model's computation graph, so developers do not have to write them by hand. This simplifies training and fine-tuning, because gradient-based optimizers can use the computed gradients directly.
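Reverse-mode automatic differentiation can be sketched with a tape. Each operation is recorded during the forward pass, and the tape is then walked backwards, applying the chain rule to accumulate gradients. This is a generic scalar illustration of the technique, not how ggml implements its backward graphs. `tape`, `leaf`, `add`, `mul`, and `backward` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical scalar autodiff for illustration; not ggml's implementation. */
typedef enum { V_LEAF, V_ADD, V_MUL } vop;

typedef struct var {
    vop op;
    struct var *a, *b; /* operands (NULL for leaves) */
    float value;       /* forward result */
    float grad;        /* d(output)/d(this), filled in by backward() */
} var;

/* Variables in creation order, which is a valid topological order. */
typedef struct { var v[64]; size_t n; } tape;

static var *push(tape *t, vop op, var *a, var *b, float value) {
    assert(t->n < sizeof t->v / sizeof t->v[0]);
    var *r = &t->v[t->n++];
    *r = (var){op, a, b, value, 0.0f};
    return r;
}

var *leaf(tape *t, float x)       { return push(t, V_LEAF, NULL, NULL, x); }
var *add(tape *t, var *a, var *b) { return push(t, V_ADD, a, b, a->value + b->value); }
var *mul(tape *t, var *a, var *b) { return push(t, V_MUL, a, b, a->value * b->value); }

/* Seed d(out)/d(out) = 1, then walk the tape backwards, pushing each
   node's gradient to its operands via the chain rule. Gradients are
   accumulated with += so a value used twice gets both contributions. */
void backward(tape *t, var *out) {
    for (size_t i = 0; i < t->n; i++) t->v[i].grad = 0.0f;
    out->grad = 1.0f;
    for (size_t i = t->n; i-- > 0;) {
        var *r = &t->v[i];
        switch (r->op) {
        case V_LEAF:
            break;
        case V_ADD: /* d(a + b)/da = d(a + b)/db = 1 */
            r->a->grad += r->grad;
            r->b->grad += r->grad;
            break;
        case V_MUL: /* d(a * b)/da = b, d(a * b)/db = a */
            r->a->grad += r->grad * r->b->value;
            r->b->grad += r->grad * r->a->value;
            break;
        }
    }
}
```

For example, with w = 2, x = 3, b = 1 and loss = (w*x + b)^2, the backward pass gives d(loss)/dw = 2 * (w*x + b) * x = 42, matching the hand-derived gradient.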

Integer quantization support

ggml supports integer quantization (for example 4-bit, 5-bit, and 8-bit formats), which stores model weights with fewer bits so that models use less memory and often run faster. Quantization trades away some numerical precision for these gains, but the loss in model quality is often small, which makes it practical to deploy large models on standard hardware.
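Block-wise quantization can be illustrated with an 8-bit scheme loosely modeled on ggml's Q8_0 format. Values are grouped into blocks of 32, each block stores one scale d = max|x| / 127, and each value is stored as round(x / d) in an int8. This sketch uses a float scale for simplicity (ggml's Q8_0 stores the scale in half precision). `block_q8`, `quantize_q8`, and `dequantize_q8` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define QK 32 /* values per block, as in ggml's Q8_0 */

/* Hypothetical block layout for illustration; ggml's Q8_0 stores d as fp16. */
typedef struct {
    float d;      /* per-block scale */
    int8_t q[QK]; /* quantized values in [-127, 127] */
} block_q8;

/* Quantize n floats (n a multiple of QK) into n / QK blocks. */
void quantize_q8(const float *x, block_q8 *y, size_t n) {
    assert(n % QK == 0);
    for (size_t b = 0; b < n / QK; b++) {
        const float *xb = x + b * QK;
        float amax = 0.0f; /* largest magnitude in this block */
        for (int i = 0; i < QK; i++) {
            float m = xb[i] < 0.0f ? -xb[i] : xb[i];
            if (m > amax) amax = m;
        }
        float d = amax / 127.0f;                /* maps [-amax, amax] onto [-127, 127] */
        float id = d != 0.0f ? 1.0f / d : 0.0f; /* an all-zero block stays zero */
        y[b].d = d;
        for (int i = 0; i < QK; i++) {
            float v = xb[i] * id;
            /* round half away from zero; the int8_t conversion truncates toward zero */
            y[b].q[i] = (int8_t)(v >= 0.0f ? v + 0.5f : v - 0.5f);
        }
    }
}

/* Reconstruct approximate floats: x is approximately d * q. */
void dequantize_q8(const block_q8 *y, float *x, size_t n) {
    assert(n % QK == 0);
    for (size_t b = 0; b < n / QK; b++)
        for (int i = 0; i < QK; i++) x[b * QK + i] = y[b].d * (float)y[b].q[i];
}
```

On typical platforms each block shrinks from 128 bytes of float32 data to 36 bytes, and each reconstructed value differs from the original by at most about half the block's scale d. This is why the accuracy loss is usually small but not zero.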
